In recent months, we've seen a significant disruption in the visual art world thanks to the integration of AI. The music business is next in line!

Laymen like me have begun using diffusion-based AI models to generate stunning, one-of-a-kind pieces. The artists whose original work was used for training hyperventilated, feeling an existential threat from AI: since January 13th, 2023, a class-action lawsuit against AI tools has been pending.

Soon it will be the music industry's turn to experience the same revolution.

The Music Industry's Ticking Clock: Why the AI Revolution has not hit the music business. Yet.

Training generative AI models on audio data is generally considered to be more challenging than training on image data. One reason for this is the complexity of the audio signal itself. Audio is time-series data, meaning that it is a continuous stream of values that changes over time. In contrast, images are static: they are composed of pixels that are fixed in place. Additionally, audio signals are often recorded in stereo, which adds an extra dimension to the data.

So while it is already challenging to create 5 seconds of good-sounding music, it's even harder to create a 3 min 30 sec piece: musical pieces are composed of long stretches of coherent sections (bridge, chorus, verse, etc.) that are repeated over the course of minutes.
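
To get a feel for the scale, here is a rough back-of-the-envelope calculation. The 44.1 kHz sample rate (CD quality) and the 512x512 RGB image size are my own assumptions for illustration, not figures taken from any specific model.

```python
# Rough size comparison: raw audio sample values vs. image pixel values.
# Assumptions (not from any specific model): CD-quality 44.1 kHz stereo audio
# and a 512x512 RGB image.
SAMPLE_RATE = 44_100   # samples per second per channel (assumed)
CHANNELS = 2           # stereo


def audio_values(seconds: float) -> int:
    """Number of raw sample values in a clip of the given length."""
    return int(seconds * SAMPLE_RATE * CHANNELS)


image_values = 512 * 512 * 3            # pixels times RGB channels
short_clip = audio_values(5)            # a 5-second snippet
full_song = audio_values(3 * 60 + 30)   # a 3 min 30 sec track

print(f"512x512 RGB image:    {image_values:,} values")
print(f"5 s of stereo audio:  {short_clip:,} values")
print(f"3:30 of stereo audio: {full_song:,} values")
print(f"Full song vs. image:  {full_song / image_values:.1f}x")
```

Under these assumptions a full-length song holds well over twenty times as many raw values as the image, and unlike pixels they have to stay coherent from the first second to the last.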

Old ways to generate music: Playing Legos

Previous attempts at automating music creation mostly focused on procedurally generating music by rearranging and semi-randomly piecing together small chunks of pre-recorded samples or notation from big libraries of conventionally composed music. An example of 10h of output from the math-metal world is shown below, with an explanation of the code behind it here.

Nice, but mostly works for music that is... well... math-metal.
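
The Lego approach boils down to something like the following toy sketch. The chunk names, the library layout and the song structure are all made up for illustration; real systems draw on large libraries of samples or notation and use far more elaborate rules.

```python
import random

# Hypothetical library of pre-made chunks, grouped by song section.
# In a real system these would be audio samples or notation snippets.
CHUNK_LIBRARY = {
    "intro": ["intro_a", "intro_b"],
    "verse": ["verse_a", "verse_b", "verse_c"],
    "chorus": ["chorus_a", "chorus_b"],
}


def assemble_song(structure, library, seed=None):
    """Pick one chunk at random for each section in the structure."""
    rng = random.Random(seed)
    return [rng.choice(library[section]) for section in structure]


structure = ["intro", "verse", "chorus", "verse", "chorus"]
song = assemble_song(structure, CHUNK_LIBRARY, seed=42)
print(song)
```

Every run with a different seed yields a different arrangement, but nothing is ever created that was not already in the library.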

New way of generating music: From scratch

The original tech is from 2016 and has been cited over 1,000 times, but a picture says more than a thousand words:

As you can see, this is a fundamentally different way of approaching the topic, which opens up completely new tools and methods.

Don't Be Left Behind: The Future of Music is Here and Now

For many, the idea of incorporating AI into their music-making process may seem daunting. This beginner's guide to using AI music tools aims to demystify the process and show how easy it can be for anyone to start experimenting with these cutting-edge technologies. From AI creation from scratch to AI-plugins for your DAW like Ableton, we'll explore the various tools available and provide tips on how to use them to enhance your music-making process.

From sample generation to AI-VST plugins to composition: AI Tools for Every Stage of the Music-Making Process

You will find the cutting edge of current AI-based music generation:

- How to generate an infinite amount of samples from your own audio or from text, along with actual AI-generated music samples

- How to create an "any sound to specific instrument" plugin

- How to use AI to create midi-tracks

- How to master your tracks similar to your favorite artist

Fine-tune a sample generation AI from audio snippets - Harmonai

What you will get: a custom AI-model that generates samples from your favorite artist or sounds


The Harmonai.org project is sponsored by Stability.ai, which released StableDiffusion, one of the currently most famous (and most sued) AI models for image generation. You can take audio of an artist you like (e.g. Daft Punk), train an AI on it, and then generate new audio snippets in the style of Daft Punk.

Below is a quick guide on how to get started:

- Sign up to the discord to stay on top of what is happening in this very active community

- Grab a bunch of audio files e.g. your own music

- Chop the input data into training-chunks using this colab notebook

- Fine-tune your model

- Generate your samples
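
The chopping step can be pictured roughly like this. This is a minimal sketch of the idea, not the code from the colab notebook; the function name, the 4-second default and the toy sample rate are my own assumptions for illustration.

```python
def chop_into_chunks(samples, sample_rate, chunk_seconds=4.0):
    """Split a sequence of audio samples into equal-length chunks.

    The trailing remainder that does not fill a whole chunk is dropped.
    """
    chunk_len = int(sample_rate * chunk_seconds)
    if chunk_len <= 0:
        raise ValueError("chunk length must be positive")
    n_chunks = len(samples) // chunk_len
    return [samples[i * chunk_len:(i + 1) * chunk_len] for i in range(n_chunks)]


# Example: 10 seconds of silence at a deliberately low 8 kHz sample rate.
sample_rate = 8_000
audio = [0.0] * (10 * sample_rate)
chunks = chop_into_chunks(audio, sample_rate)
print(len(chunks), "chunks of", len(chunks[0]), "samples each")
```

Ten seconds of input yield two full 4-second chunks here; the last two seconds are discarded because they cannot fill a chunk.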

What I did: Grabbed a Daft Punk model checkpoint and fine-tuned it with the currently unpublished album of Monika Roscher. It took me 14 training runs (Colab keeps kicking non-paying users out after 2-4h of training) to train this 450-million-parameter model for 20+ epochs, each taking about an hour of GPU time.

Does it sound great? No. Is it impressive that this comes out of a magic box, literally from noise, 3 months after the project started? Hell yeah!

Currently, training is limited to 4-second input samples. This means no broader context (bridge, verse, chorus...) can be encoded, and the sampled sounds are more suitable for dubstep than for other types of music. Lots to be improved, but as with everything else in AI nowadays, I guess progress will be quicker than expected. I'll just keep training and hoping for the best :D

One user of the Discord summarized it well:

"I see huge potential for a songwriting tool here, if one could generate a hundred songs a batch using this method, then filter out the bad apples and save good pieces to a folder, the good clips can be added to the training data and thus the dataset can be multiplied over time using the model outputs itself"

Others have been much more successful (Source)
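
The feedback loop that the Discord user describes in the quote above could be sketched roughly like this. `generate_batch` and `is_good` are hypothetical stand-ins that I made up: the first represents sampling from a model trained on the current dataset, the second a human (or automatic) quality check. The actual retraining between rounds is left out.

```python
import random


def grow_dataset(dataset, generate_batch, is_good, rounds=3, batch_size=100):
    """Repeatedly generate clips, keep the good ones, add them to the dataset.

    `generate_batch` and `is_good` are hypothetical callables supplied by the
    caller; no real model or library API is implied.
    """
    dataset = list(dataset)
    for _ in range(rounds):
        batch = generate_batch(dataset, batch_size)
        keepers = [clip for clip in batch if is_good(clip)]
        dataset.extend(keepers)
    return dataset


# Toy stand-ins: a "clip" is just a quality score between 0 and 1.
rng = random.Random(0)


def fake_generate(data, n):
    return [rng.random() for _ in range(n)]


def fake_filter(clip):
    return clip > 0.9


grown = grow_dataset([1.0] * 10, fake_generate, fake_filter)
print(f"Dataset grew from 10 to {len(grown)} clips")
```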

Create audio samples from text - Riffusion

What you will get: text-to-music interface

Try it yourself by typing a genre of music you would like to hear on Riffusion.com. Spotify should rethink their business model ;)

I especially love their about section.

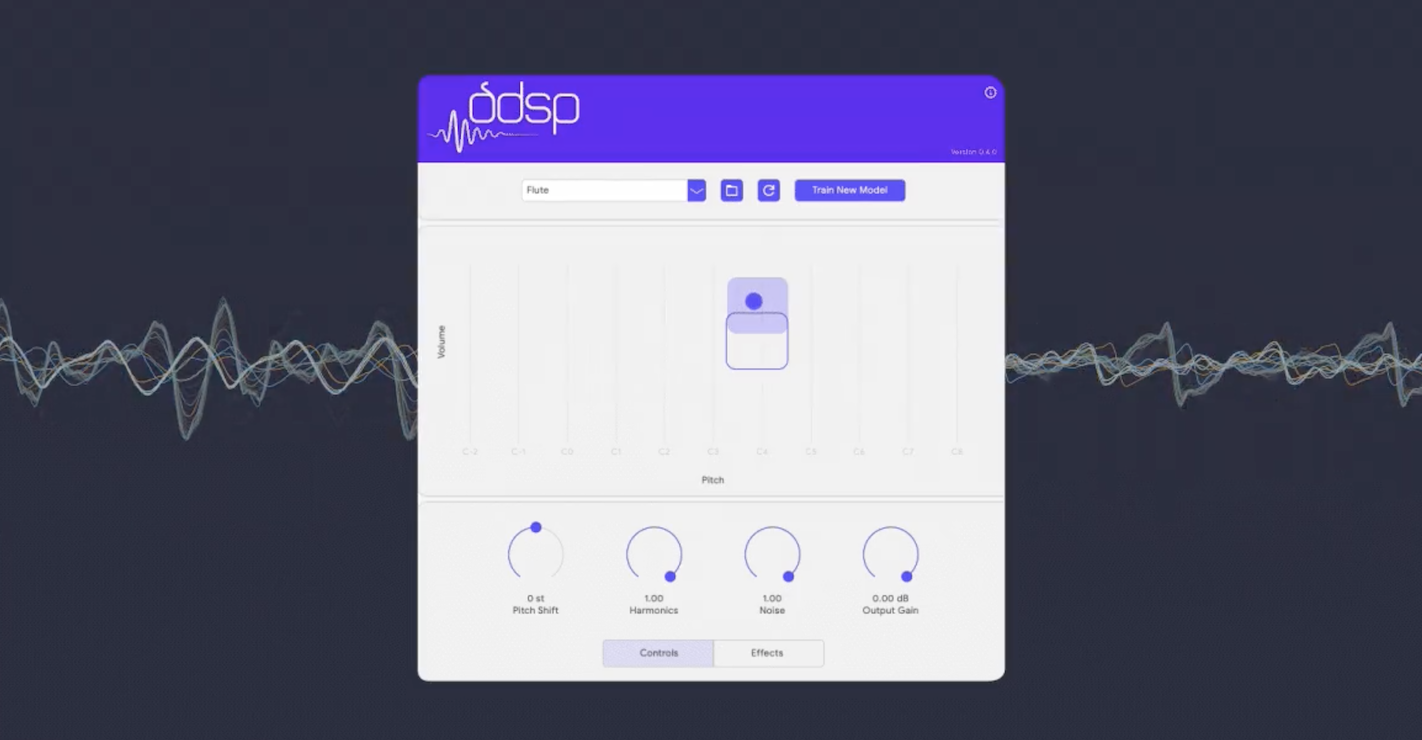

Create an AI-based instrument as a VST-plugin

What you will get: a trainable VST-plugin that morphs any sound into several instruments

This tool "Transforms the familiar into the unexpected". It morphs audio into a range of different instruments. Unlike MIDI notes, it preserves the nuances of pitch and dynamics for expressive neural synthesis. Long story short: Guitar in, trumpet out.

I would actually say it unlocks a whole new world for instrumentalists who do not play piano.

If you are an instrumentalist who has NOT learned piano, you have a hard time creating music with VST plugins, as these are usually based on MIDI. Which requires notes... Which requires a keyboard... Which requires you to play the piano... A guitarist is by default locked out of this world unless they buy a MIDI guitar.

As a result, the whole world of cool audio-generating plugins is open to the piano nerds only. At the same time, a piano is really bad at adding expression because of the binary nature of keys: pressed or not pressed. Velocity, aftertouch, etc. are nice, but more physical instruments like guitar, saxophone and so forth just allow much better expression.

Having the expression of a physical instrument but still being able to morph the sound combines the best of both worlds. Pretty rad!

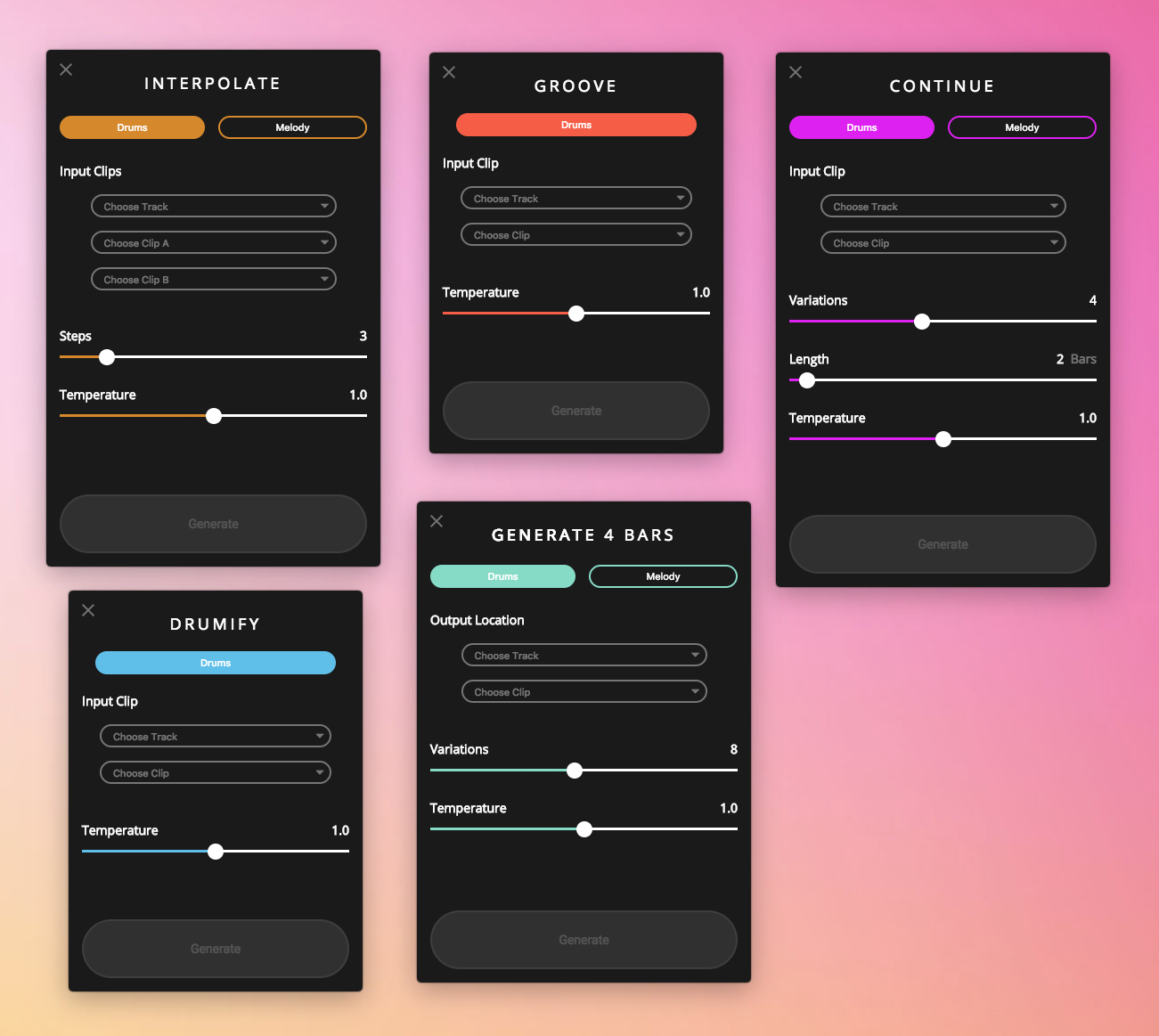

Create midi

What you will get: AI generated midi-tracks

This suite contains 5 tools: Continue, Groove, Generate, Drumify, and Interpolate, which let you apply Magenta models on your MIDI clips from the Session View.

From the website:

- Continue uses the predictive power of recurrent neural networks (RNN) to generate notes that are likely to follow your drum beat or melody.

- Generate is similar to Continue, but it generates a 4 bar phrase with no input necessary.

- Unlike the other plugins, Interpolate takes two drum beats or two melodies as inputs. It then generates up to 16 clips which combine the qualities of these two clips. It's useful for merging musical ideas, or creating a smooth morphing between them.

- Groove adjusts the timing and velocity of an input drum clip to produce the "feel" of a drummer's performance. This is similar to what a "humanize" plugin does, but achieved in a totally different way.

- Drumify creates grooves based on the rhythm of any input. It can be used to generate a drum accompaniment to a bassline or melody, or to create a drum track from a tapped rhythm.
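
For contrast, here is roughly what a conventional "humanize" function does: it nudges timing and velocity by small random amounts. This is explicitly not how Groove works (per the website, it gets there in a totally different way); the `Note` class and the jitter values are my own assumptions for illustration.

```python
import random
from dataclasses import dataclass


@dataclass
class Note:
    start: float    # position in beats
    velocity: int   # MIDI velocity, 1-127


def humanize(notes, timing_jitter=0.02, velocity_jitter=8, seed=None):
    """Return a copy of the notes with small random timing/velocity offsets."""
    rng = random.Random(seed)
    result = []
    for note in notes:
        start = max(0.0, note.start + rng.uniform(-timing_jitter, timing_jitter))
        velocity = note.velocity + rng.randint(-velocity_jitter, velocity_jitter)
        velocity = min(127, max(1, velocity))  # clamp to the valid MIDI range
        result.append(Note(start=start, velocity=velocity))
    return result


# A rigid four-on-the-floor kick pattern: every hit exactly on the beat.
pattern = [Note(start=float(beat), velocity=100) for beat in range(4)]
humanized = humanize(pattern, seed=1)
for note in humanized:
    print(f"start={note.start:.3f} velocity={note.velocity}")
```

The random offsets make the pattern less robotic, but they know nothing about how a real drummer plays, which is the gap a learned model tries to close.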

If you are stuck in a blank-canvas creative block you can use these tools to draft ideas.

Mastering your track

What you will get: Your tracks mastered similar to your favorite reference track

Services such as Landr.com offer AI-based mastering of your finished tracks. Their output is really good and I have used it frequently for my music, but currently all the free options are gone from their website. If you still want to master your track for free using open-source tools, you can use the following Docker-based alternative.
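
To give an idea of what "mastered similar to a reference" can mean, here is a minimal sketch of one single ingredient: scaling a track so that its overall level (RMS) matches that of a reference. This is my own simplified illustration, not how Landr or any particular tool works; real mastering also involves equalization, compression and limiting.

```python
import math


def rms(samples):
    """Root-mean-square level of a sequence of samples."""
    return math.sqrt(sum(s * s for s in samples) / len(samples))


def match_loudness(track, reference):
    """Scale `track` so that its RMS level equals that of `reference`."""
    track_rms = rms(track)
    if track_rms == 0:
        return list(track)  # silence stays silence
    gain = rms(reference) / track_rms
    return [s * gain for s in track]


# Toy signals: a quiet track and a louder reference.
track = [0.1, -0.1, 0.1, -0.1]
reference = [0.5, -0.5, 0.5, -0.5]
mastered = match_loudness(track, reference)
print(f"before: {rms(track):.2f}  after: {rms(mastered):.2f}  target: {rms(reference):.2f}")
```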

Conclusion

AI will also disrupt the music industry. Soon.

Similar to visual artists, musicians will first hyperventilate that their IP is getting stolen, but later realize that it's actually a very handy tool for unlocking creativity and for overcoming the limitations of samples, of the instrument you chose to focus on when you were 12, and of your own creative potential.

What a time to be alive :D