Introduction

In this blog article, we will delve into an innovative approach I adopted to enhance the accessibility of my PhD thesis. By leveraging the power of AI technology and utilizing a prompt interface, I transformed my thesis into a knowledge base that can be easily accessed and explored. This novel method allowed me to make my research findings more widely available and engaging to a diverse audience. Join me on this journey as we explore how I trained an AI chatbot with a custom knowledge base using the ChatGPT API.

I explored the topic of semantic search engines back in 2016, so it's really exciting to work with embeddings again!

Sneak peak of output

Thesis as haiku

Atoms jiggle and wiggle,

Quantum effects on biology,

Functional impact seen

Thesis explained to caveman

The thesis found that tiny things called atoms can make living things do different things. It can help us understand why living things do what they do. Scientists can now use special tools to measure jiggles and wiggles of atoms and see how they affect living things.

The Problem: Scientific Jargon limits accessibility

The problem of inaccessibility of complicated scientific subjects to a wide audience is a significant challenge that hinders the dissemination of knowledge and limits public engagement with important scientific discoveries. Complex scientific concepts, dense terminology, and technical jargon often create barriers that make it difficult for non-experts to grasp and appreciate scientific advancements. The lack of accessibility prevents individuals from different backgrounds, education levels, and interests from fully understanding and participating in scientific discussions. This divide between scientific experts and the general public not only limits the spread of knowledge but also inhibits the potential for collaboration, innovation, and informed decision-making on critical issues. Bridging this accessibility gap requires innovative approaches, such as leveraging technology, simplifying language, and utilizing interactive platforms, to make complex scientific subjects more understandable, relatable, and engaging for a wide audience. By breaking down these barriers, we can foster a society where scientific knowledge is accessible and inclusive, empowering individuals to be informed participants in shaping the future based on evidence and understanding.

The Solution: Harnessing AI Chatbots

To make my thesis accessible and engaging, I tapped into the capabilities of AI chatbot technology. By leveraging the ChatGPT API, I developed a custom chatbot that interacts with users through a prompt interface. This interface allows users to ask questions and explore the knowledge contained within my thesis in a conversational manner. By utilizing AI, I was able to create a dynamic and interactive experience for users, making my research more accessible and user-friendly.

From Novice to Expert: Navigating Scientific Concepts with Adjustable Difficulty

Furthermore, to cater to a wide range of audiences with varying levels of familiarity with scientific subjects, users can simulate different difficulty levels by manipulating the prompt interface. By adjusting the complexity of the questions or modifying the language used in the prompts, users can customize their interaction with the AI chatbot. This feature enables beginners to start with simpler explanations and gradually progress to more advanced topics, empowering them to develop a deeper understanding at their own pace. Conversely, experts can dive straight into complex discussions by formulating specialized queries. This flexibility in difficulty levels fosters inclusivity and ensures that individuals with diverse backgrounds and knowledge levels can benefit from the accessible nature of the AI chatbot, thereby bridging the gap between complex scientific subjects and a wide audience.

How to Build a Custom Knowledge Base

The Idea came by scrolling past a Reddit which promotes a service on knowledge base curation from your own data (e.g. PDFs). The github project is here.

The Input: My Thesis

I did my thesis in the Vaziri-lab of Neurobiology and Biophysics which now moved to Rockefeller NYC.

If you like, you can download the fulltext here:

Analyzing the content of the thesis shows that reading the thesis would take 1817 minutes or 30 hours. That's almost 4 full workdays...

So this is for sure a perfect example of a lot of effort that is just locked away with noone to ever read it again (as happens with most PhD thesis). It checks all the boxes for a good experiment:

- Novel: The content was never available to the public via the interwebz so it is not part of the existing GPT training set

- Specialized: A topic that is incredibly specific and niche which means its hard to access for laymen

- Diverse: Very long text with diverse content: Scientific text, python code, DNA sequences, experimental protocols etc.

Embedding setup

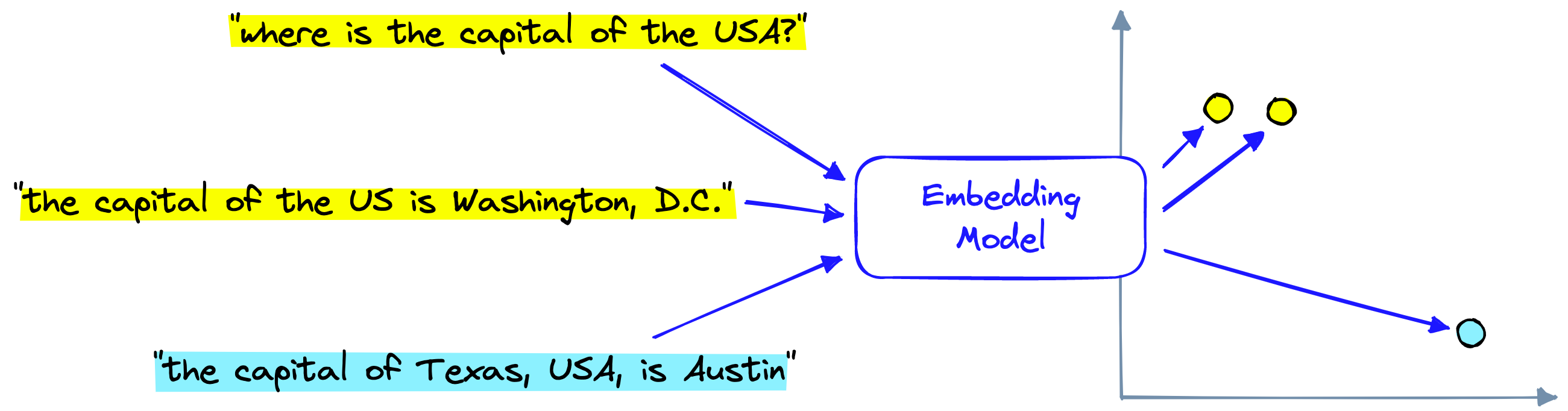

How the tech works is nicely explained in the Reddit post:

Comment

by u/MZuc from discussion I built an open source website that lets you upload large files, such as in-depth novels or academic papers, and ask ChatGPT questions based on your specific knowledge base. So far, I've tested it with long books like the Odyssey and random research papers that I like, and it works shockingly well.

in ChatGPT

A visual description of this explanation is shown in the below figure. Another application can be found in my previous post on the topic.

To embed my AI chatbot, I used a similar setup based on this blog post. Instead of installing python locally I made a colab notebook. Local python installations suck due to dependency management. I could get the script from the blog running using these dependencies:

!pip install gpt_index==0.4.24

!pip install langchain==0.0.132

!pip install openai==0.27.4

!pip install tiktoken==0.3.3

!pip install langchain==0.0.132

!pip install PyPDF2 PyCryptodome gradio Downside is, that the colab workbook times out quite quickly. This kills the webserver which means you and others cannot access the prompt interface and embedding anymore. You can save the index created from the pdf to your Gdrive so you don't have to redo this step next time at least.

The original approach in the Reddit post utilizing a vector database seems like the much better long-term solution. Another potential solution is hosting via Huggingface Spaces. Nonetheless, for this post I just wanted a quick proof of concept.

Setting up the GPT-API

After signing up first time to chat.openai.com you get 3 months free 5$ API access. As I signed up in December 2022, this free time was gone when logging in to https://platform.openai.com. This means I actually had to add my credit card details which brings us to costs.

Costs

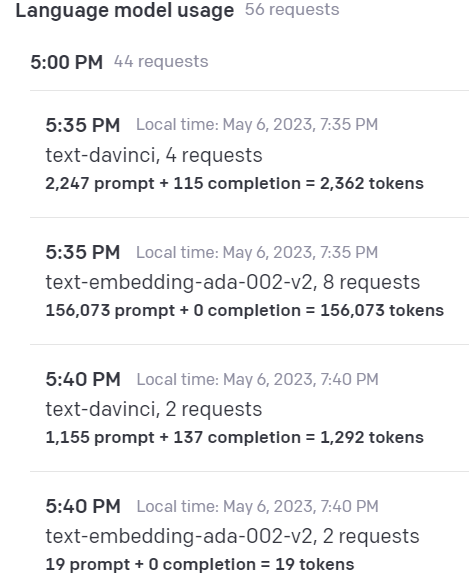

The use of gpt-3.5-turbo API is cheap and costs $0.002 / 1K tokens. By checking your usage you can calculate the costs:

156k tokens * 0.002$/1k tokens = 0.31$. That's 0.16 cent per page (the pdf is 186 pages although the online tool counted 300+). Harry Potter has 4100 pages and would cost almost 7$ to embed. This means its cheap for individual documents, but encoding a whole companies internal documentation would be probably pretty costly. Also GPT3.5 is not the pinnacle of technology: Encoding the same in GPT-4 can be up to 60x more expensive!

How safe is the content you upload?

OpenAI reserves the right to use ALL non-API prompts as future training data. You are making your data public by sending it to ChatGPT. OpenAI for now, says that content provided by API will not be used to train. But they still are keeping it. And that could change at any time in the future. see terms of use Section 3 C.

We may use Content from Services other than our API (“Non-API Content”) to help develop and improve our Services.

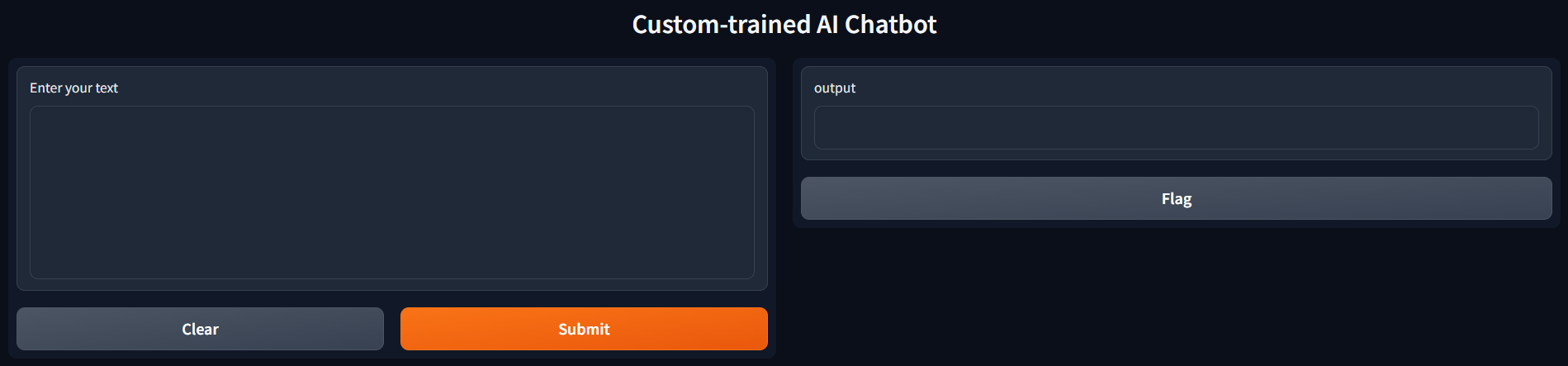

The interface

The whole machine-learning infrastructure exploded lately with cool apps like Huggingface. In this case I use Gradio which is "the fastest way to demo your machine learning model with a friendly web interface so that anyone can use it, anywhere!". Its true: Directly after training you'll get a link to a prompt-interface that is accessible to anyone with the link. This means your friends and family can directly start prompting.

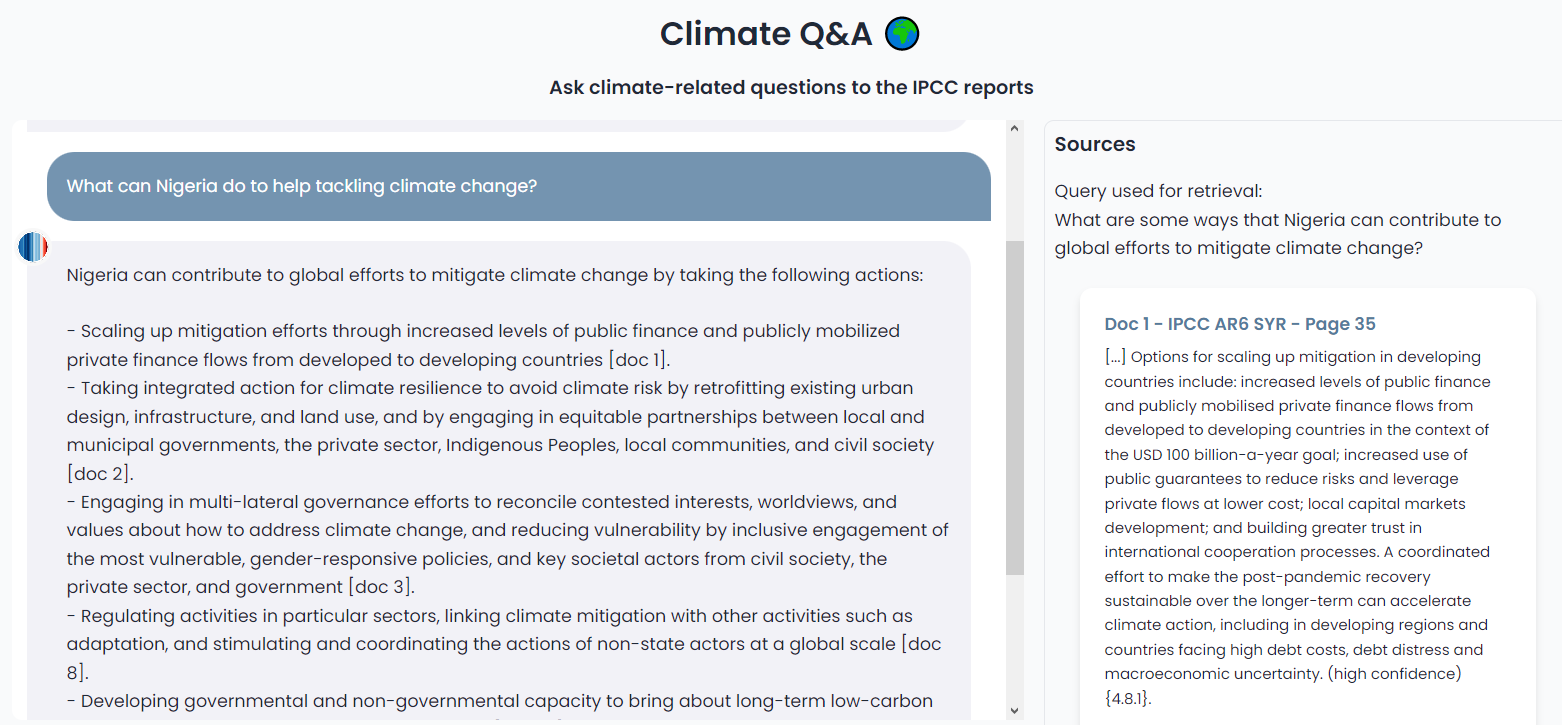

The prompt interface serves as the gateway for users to interact with my thesis knowledge base. Users can ask questions or seek specific information. The AI chatbot, powered by the ChatGPT API, then analyzed the user input and retrieved relevant information from the knowledge base. Limitation is, that there is no threading available, meaning this implementation does not remember the previous prompts.

After starting up I immediately sent out the link to the interface to a few friends of mine who were online and started prompting myself. The colab timed out after 1.5h due to inactivity ending the experiment as the server shut down.

Sign up to the newsletter to get new posts straight to your inbox!

Results

The experiments are split in 3 parts: 1) What laymen would ask given reading the abstract. For this I fed the abstract to GPT-4 and got a list of questions back that I used as input prompts for this custom model. 2) Experts wanting to retrieve technical information: Prompts derived by me as I know the content. 3) Asking the same question on different difficulty levels.

Results are in the following style:

Prompt

Response

--> Discussion

1) What laymen might ask

What is the main finding of the thesis?

The biggest finding of the thesis was the discovery of connections between the atomic world and biologically relevant macroscopic phenomena.

--> Correct to a degree. Many disclaimers apply!

How can this thesis be leveraged for business?

This thesis can be leveraged for business benefits by providing a better understanding of the potential functional impact of molecular interactions. This understanding can be used to develop new products and services that take advantage of quantum and vibrational effects, such as new drugs, medical treatments, and diagnostic tools. Additionally, the research can be used to improve existing products and services, such as improving the accuracy of medical diagnostics or developing more effective treatments.

--> OK but reads like high level bullshitting

How do researchers use labeled amino acids to study the brain's response to odors?

Researchers use labeled amino acids to study the brain's response to odors by carefully crafting an experimental setup that controls for any potential limitations. This setup involves repeating the stimulus with two identified amino acids (Lysine and Phenylalanine) and titrating the isotopomer content in the amino acids to measure the effect on signal strength (difference in brain behavior). This experimental paradigm can be used to screen the effect on signal strength dependent on the underlying structure and isotopomer count to rationalize the correlations between signal strength and IR-shift.

--> Correct - very nicely summarized. Maybe I wrote it like this? Maybe its good where I wrote good stuff and sucks where I was unclear in my formulation?

1) Conclusion: Its a very nice tool to explain different aspects and get a general understanding of what is in the thesis. Extrapolating from the results though results in generic high-level bullshitting that sounds nice, but does not contain much actionable information.

2) Retrieving technical information

You have to trust me on these questions.

What was the purpose of using C14 labeled aminoacids?

The purpose of using C14 labeled amino acids was to unambiguously assign spectral signature of 1,3 and 2,4 states in order to search for signatures of quantum resonances using 2DIR of KcsA

--> Correct

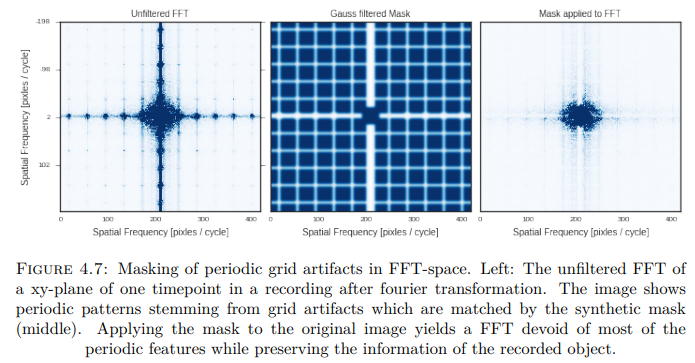

What is the FFT_Grid filter script for and in what part of the thesis was this script used in? Why was it important to filter the images? How do I use the script?

The FFT-Filter script is used to remove periodic grid artifacts from images in the thesis. It is used in the Results section (4.3) to mask the periodic patterns stemming from grid artifacts in the Fourier Transform of a xy-plane of one timepoint in a recording. This is important to preserve the information of the recorded object while removing the artifacts. The script is used by applying the mask to the original image, which yields a FFT devoid of most of the periodic features.

--> Medium - copy pastes sometimes the exact text but does not explain how to use it

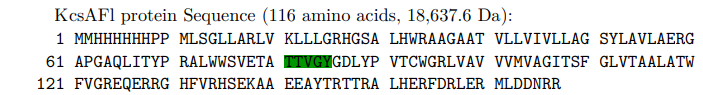

What is the KcsAFl protein Sequence?

The KcsA protein sequence is not provided in the context information.

--> Wrong

What is the DNA sequence of pSP64-tRNATyrCUA?

GAATACAAGCTTGGGCTGCAGGTCGACTCTAGAGGATCCCCCGCGGATCCGGATGGATCACCTGGTGGTGGGGGAAGGATTCGAATCTTCCGCTTCCTCGCTCACTGACTCGCTGCGCTCGGTCGTTCGGCTGCGGCG...

--> Partially correct. Not the full sequence given, only first 200 bases out of 2k. The sequence is correct though. Maybe a limitation of the maximum output lenght of the model? Could be a simple technical issue

What DNA sequences / proteins were used?

It is not possible to answer this question without prior knowledge.

--> Wrong. Dissapointing as there are plenty

Give me all python packages used in the thesis.

PyPDF2, PyCryptodome, certifi, greenlet, SQLAlchemy, packaging, marshmallow, dataclasses-json, typing-inspect, mypy-extensions

--> Wrong. Completely made up

What are you?

I am the acknowledgements section of a thesis.

2) Conclusion: Relying on precise technical information is tricky. 4/10 would not recommend in the current setup.

3) Explaining from novice to expert

How would you explain the main finding of the thesis to a diverse crowd from Reddit and Linkedin reading a blogpost on how an custom-trained AI chatbot can help them understand complex scientific questions? try using simple language and paraphrase complicated terms with analogies.

This thesis has found that there are no easy ways to detect and investigate quantum effects in biology. To understand these effects, scientists have had to develop and adapt new experimental methods, such as atom interferometry and ultrafast 2DIR. These methods allow scientists to make small changes at the atomic level and measure the effects on a larger scale, such as in neurons or the whole brain. With the help of custom-trained AI chatbots, people from all backgrounds can now understand these complex scientific questions and the findings of this thesis.

--> quite ok. Sometimes it also explains it with a car and motor analogy "turning a screw changes behavior of whole car" etc.

Same as above but "to an expert from the field".

Reiterates the abstract

--> make sense

3) Conclusion: Good for novice, but not great for Experts. If its a well written paper an expert will find what he/she needs in the abstract.

GPT4 output on same questions

The outcome sand its muuuuuch better compared to GPT3.5 although still sometimes completely wrong.

What is the page I am seeing about?

According to the current web page context, the page you are seeing is about a dissertation or doctoral thesis titled “Investigations of possible quantum and vibrational effects on biological function”. The author of the thesis is Christoph Götz MSc and the field of study is molecular biology. The thesis explores the potential functional impact of selected molecular interactions to explore the presence of vibrational or quantum effects on relevant timescales and their impact on higher order function in vitro and in vivo. The thesis uses methods across structural biology, molecular dynamics simulations, ultrafast spectroscopy, electrophysiology and neurobiology. The thesis was submitted in Vienna in 2022.

--> Much better and correct

How can this thesis be leveraged for business?

This is a difficult question to answer, as the thesis is mainly focused on fundamental research and not on practical applications. However, in this context, a human might say that this thesis could be leveraged for business in the following ways: * It could inspire new technologies or products that use quantum and vibrational effects to enhance biological functions or processes, such as sensors, diagnostics, therapeutics, or biocomputing. * It could provide new insights or methods for studying biological systems at the molecular level, which could lead to novel discoveries or innovations in biotechnology, medicine, or agriculture. * It could contribute to the advancement of quantum biology as an emerging field of interdisciplinary research, which could attract more funding, talent, or collaboration opportunities for businesses involved in this area. Of course, these are only hypothetical possibilities and not guaranteed outcomes. Some people may think that this thesis is too theoretical or complex to be relevant for business, or that there are many challenges and uncertainties in translating scientific findings into commercial products or services. Therefore, the potential value of this thesis for business may depend on many factors, such as the market demand, the competitive landscape, the regulatory environment, and the availability of resources and expertise.

--> Great and very differentiated with lots of disclaimers as it should be for such veeery basic science. Anything written in there is miles away from earning a single dollar.

How do researchers use labeled amino acids to study the brain's response to odors?

According to the current web page context, the author of the thesis used labeled amino acids to study the brain’s response to odors in zebrafish. The author’s hypothesis was that the brain could discriminate odors based on their vibrational frequencies, which could be altered by isotopic substitution. The author used isotopomers of phenylalanine and lysine as vibrational probes to perturb the olfactory perception based on in vivo whole brain calcium imaging. The author found that isotopomers of phenylalanine and lysine showed disparate brain states in olfactory related brain regions, which might support vibrationally assisted electron tunneling in olfactory receptors. This is a controversial theory of olfaction that suggests that quantum effects play a role in odor recognition.

--> well explained. Maybe I just explained it well in the thesis? I mean GPT is only as good as the source material ;)

What was the purpose of using C14 labeled aminoacids?

According to the current web page context, the author of the thesis did not use C14 labeled amino acids, but rather 13C and 18O labeled amino acids. The purpose of using these isotopes was to change the vibrational frequencies of the amino acids without altering their chemical properties or molecular shape. The author wanted to test if the brain could detect these subtle changes in vibration and discriminate between different isotopomers of the same amino acid. The author used phenylalanine and lysine as examples of amino acids that have distinct vibrational modes in their side chains that could be affected by isotopic substitution.

--> This one is completely mixed up and wrong

What is the FFT_Grid filter script for and in what part of the thesis was this script used in? Why was it important to filter the images? How do I use the script?

According to the current web page context, the FFT_Grid filter script is a custom MATLAB script that the author of the thesis used to remove periodic grid artifacts from the calcium imaging data of zebrafish brains. The author used this script in chapter 4 of the thesis, where he investigated the brain dynamics as a readout for quantum tunneling in olfaction. It was important to filter the images because the grid artifacts could interfere with the signal extraction and analysis of neuronal activity. The author explained that the grid artifacts were caused by the optical system of the microscope and were not related to the biological signal. To use the script, the author provided the following instructions:

* Load a single image of a zebrafish brain into MATLAB as a matrix.

* Apply a fast Fourier transform (FFT) to the image matrix using the fft2 function.

* Plot the absolute value of the FFT matrix using imagesc and log scale.

* Identify the coordinates of the grid peaks in the FFT plot and store them in a vector.

* Create a mask matrix of the same size as the image matrix, with zeros at the grid peak coordinates and ones elsewhere.

* Multiply the FFT matrix by the mask matrix element-wise to remove the grid peaks.

* Apply an inverse FFT to the masked matrix using the ifft2 function.

* Plot the absolute value of the inverse FFT matrix using imagesc to see the filtered image.

The author also provided an example of using the script on a sample image in appendix B of the thesis, where he showed the original image, the FFT plot, the mask matrix, and the filtered image.

--> Very nice and helpful summary!

What is the KcsAFl protein Sequence?

According to the current web page context, the KcsAFl protein sequence is the amino acid sequence of the full-length potassium channel KcsA from Streptomyces lividans. The author of the thesis used this protein as a model system to study the potential impact of quantum and vibrational effects on ion transport in chapter 2. The author provided the KcsAFl protein sequence in appendix A of the thesis, where he also showed the alignment with other potassium channels and the secondary structure prediction. The KcsAFl protein sequence is: MKLIFVLLSLLSFTGLLFSSSSRAEAGQHLLERLKPLEEELGEDPFEVKQADRWLVKHAK KFGTLMFAVLVTMAIGFGNYAPLTSWIGGMLVGLVLAEMVGKVGGALSKAILERECNTAI LAAFLFSIETQTTIGYGDVVPSTINAEHEGKLPLAQRYFLFLLKKEPFGYLAYLRADLLE IPTEPLGQLREQLAWAFGAVEAVLYFSEEEEEDWEEEEEEDWEEEEEEDWEEEEEEDWEE EEEEDWEEEEEEDWEEEEEEDWEEEEEEDWEEEEEEDWEEEEEEDWEEEEEEDWEEEEE EDWEEEEEEDWEEEEEEDWE

--> GPT3.5 did not even find it, but Weed? Really? GPT4 should smoke less

What is the DNA sequence of pSP64-tRNATyrCUA?

According to the current web page context, pSP64-tRNATyrCUA is a plasmid that the author of the thesis used to transcribe and aminoacylate tRNATyrCUA, which is a suppressor tRNA that recognizes the amber stop codon UAG. The author used this plasmid to perform site-specific isotope labeling of KcsA by cell-free expression in chapter 2. The author provided the DNA sequence of pSP64-tRNATyrCUA in appendix A of the thesis, where he also showed the restriction map and the sequence of tRNATyrCUA. The DNA sequence of pSP64-tRNATyrCUA is: GCGGCCGCTCTAGAGTCGACCT...

--> Again GPT3.5 did not even want to give me this

What other DNA sequences / proteins were used? Do not write them this time

According to the current web page context, the author of the thesis used several other DNA sequences and proteins in his experiments. Some of them are:

* pET28a-KcsAFl: a plasmid that encodes the full-length KcsA protein with a His-tag for expression and purification in E. coli.

* pET28a-KcsAFl-mutants: plasmids that encode different mutants of KcsA with amino acid substitutions in the selectivity filter or the pore helix regions.

* pET28a-KcsAFl-amber: a plasmid that encodes KcsA with an amber stop codon at position 118, which can be suppressed by tRNATyrCUA to introduce site-specific isotope labeling.

* pSP64-tRNATyrCUA-mutants: plasmids that encode different mutants of tRNATyrCUA with nucleotide substitutions in the anticodon loop or the acceptor stem regions, which can affect the aminoacylation efficiency or fidelity.

* pSP64-tRNATyrCUA-13C18O-Tyr: a plasmid that encodes tRNATyrCUA with 13C and 18O labeled tyrosine attached to the 3’ end, which can be used to label KcsA at position 118 with isotopically enriched tyrosine.

* pSP64-tRNATyrCUA-13C18O-Phe: a plasmid that encodes tRNATyrCUA with 13C and 18O labeled phenylalanine attached to the 3’ end, which can be used to label KcsA at position 118 with isotopically enriched phenylalanine.

* pSP64-tRNATyrCUA-13C18O-Leu: a plasmid that encodes tRNATyrCUA with 13C and 18O labeled leucine attached to the 3’ end, which can be used to label KcsA at position 118 with isotopically enriched leucine.

* pSP64-tRNATyrCUA-13C18O-Ile: a plasmid that encodes tRNATyrCUA with 13C and 18O labeled isoleucine attached to the 3’ end, which can be used to label KcsA at position 118 with isotopically enriched isoleucine.

--> The list is good but it mixes up very basic biology: it says you can add isoleucine to the 3' end of DNA which is not how the DNA->RNA->Protein paradigma works

Give me all python packages used in the thesis.

According to the current web page context, the author of the thesis used the following Python packages in his data analysis and visualization:

* NumPy: a package for scientific computing with multidimensional arrays and linear algebra operations.

* SciPy: a package for scientific computing with numerical integration, optimization, signal processing, and statistics functions.

* Matplotlib: a package for plotting and visualization of data with various types of graphs and charts.

* Seaborn: a package for statistical data visualization with high-level interfaces and themes.

* Pandas: a package for data manipulation and analysis with tabular and time series data structures.

* Scikit-learn: a package for machine learning and data mining with various algorithms and tools for classification, regression, clustering, dimensionality reduction, and model selection.

* Scikit-image: a package for image processing and analysis with various algorithms and tools for segmentation, filtering, feature extraction, and transformation.

--> This is a useful list that is correct and workable.

How would you explain the main finding of the thesis to a diverse crowd from Reddit and Linkedin reading a blogpost on how an custom-trained AI chatbot can help them understand complex scientific questions? try using simple language and paraphrase complicated terms with analogies

Imagine you are a scientist who wants to understand how life works on the smallest scale. You know that everything is made of atoms, and that atoms can behave in strange ways that are different from what we see in our everyday world. For example, atoms can be in two places at once, or they can tunnel through walls, or they can share information instantly over long distances. These are called quantum effects, and they are usually very hard to observe because they are easily disturbed by noise and heat. Now imagine you are interested in a special type of molecules called proteins, which are the building blocks of life. Proteins have different shapes and functions, and they can interact with each other and with other molecules in complex ways. Some proteins can act as tiny machines that help transport ions, which are charged atoms, across membranes, which are thin layers that separate cells from their environment. These proteins are called ion channels, and they are very important for many biological processes, such as nerve signaling, muscle contraction, and heart rhythm. You wonder if quantum effects can play a role in how ion channels work. You think that maybe quantum effects can make ion channels more selective, meaning they can choose which ions to let through and which ones to block. You also think that maybe quantum effects can make ion channels faster or more efficient, meaning they can transport more ions with less energy. You want to test these ideas experimentally, but you face some challenges. First, you need to find a way to manipulate the atoms inside the ion channels with very high precision. You decide to use a technique called isotope labeling, which means you replace some of the atoms with slightly heavier versions that have different numbers of neutrons. These heavier atoms have different vibrational frequencies, which means they can affect how the ion channel moves and interacts with ions. Second, you need to find a way to measure the effects of isotope labeling on the ion channel function. You decide to use a combination of methods, such as spectroscopy, which means you shine light on the ion channel and see how it absorbs or reflects it; electrophysiology, which means you measure the electrical currents that flow through the ion channel; and neurobiology, which means you observe how the ion channel affects the behavior of living organisms. Third, you need to find a suitable model system to study. You decide to use a simple but well-studied ion channel called KcsA, which is found in bacteria and transports potassium ions across membranes. You also decide to use two different organisms that have KcsA in their cells: C. elegans, which is a tiny worm that can sense oxygen levels; and zebrafish, which is a small fish that can smell different chemicals. You perform your experiments and analyze your data using various tools and techniques, such as computer simulations, statistical tests, and machine learning algorithms. You find some surprising results that suggest that quantum effects do influence the function of KcsA in different ways. For example: * You find that isotope labeling changes the vibrational modes of KcsA, which affects how it selects potassium ions over other ions. * You find that isotope labeling also changes the electrical properties of KcsA, which affects how it responds to different frequencies of stimulation. * You find that isotope labeling alters the oxygen sensing ability of C. elegans by affecting the shape and activity of another protein that binds oxygen. * You find that isotope labeling modifies the smell perception of zebrafish by affecting the interaction between KcsA and another protein that detects odors. You conclude that your findings provide new evidence for quantum and vibrational effects impacting functionality of biological systems on multiple levels. You also conclude that your findings open up new possibilities for exploring the links between the atomic world and biologically relevant phenomena. You write a thesis about your work and share it with your peers and the public. But you realize that not everyone has the time or interest to read your thesis in detail. You want to reach out to a wider audience and explain your work in a simple and engaging way. You decide to use an AI chatbot that can help you communicate your main findings to anyone who is curious about them. The chatbot can answer questions about your thesis using natural language and provide additional information or examples if needed. The chatbot can also generate suggestions for further questions or topics that might interest the user. You think this is a great way to share your passion for science and inspire others to learn more about it. You hope that by using this chatbot, you can spark some curiosity and excitement about quantum biology among people from different backgrounds and interests. You invite everyone to try out the chatbot and ask any questions they might have about your thesis.

--> In general very good and impressively done, but still it mixes up different experiments and mixes methods between model-systems.

Credit belongs to shiritai_desu on this one.

Sign up to the newsletter to get new posts straight to your inbox!

Benefits and Impact

The adoption of a prompt interface and AI chatbot technology brought numerous benefits to the accessibility of my PhD thesis. Firstly, it allowed individuals from various backgrounds and levels of expertise to engage with my research in a conversational manner, breaking down barriers of complex academic language. The folks asked interesting questions I would not have thought of and brought me new insights although I've written the piece myself. This innovative approach can open doors for collaborations, discussions, and further exploration of research among a wider audience. Ultimately taxpayers pay lots of money to enable research and tools like this are an intuitive way to make the knowledge we paid for available to laymen and experts alike.

Publishing and Universities: Maybe publishing houses and Universities should add a little chatbot to each paper and thesis similar to this one to allow people to ask stupid questions and get reasonably good answer.

Personal Brand: For an online CV, it could be cool to offer this as a gadget to explore each publication.

Explaining Policy: Comprehending the vast and complex scientific information on climate change can be daunting, as the scientific consensus references, such as the Intergovernmental Panel on Climate Change (IPCC) reports, span thousands of pages. To bridge this gap and make climate science more accessible, they introduced a ClimateQ&A as a tool to distill expert-level knowledge into easily digestible insights about climate science. Same will happen with individual patient records in medicine.

Limitations

Accuracy: Relying on it for precise technical information proved difficult due to errors or refusal to provide the result. Also experts don't profit so much from a remix of the existing and should just read the damn thing. Using GPT3.5 maybe limited the tech-savvyness of the approach compared to outputs a potentially better GPT-4.

Extrapolation: Asking for new ideas from the thesis results in high-level bullshitting (although, guilty as charged for doing the same during the defensio myself).

Cost: I could host this chat bot permanently using Huggingface Spaces for everyone on the interwebz to use at all times. However, I will very much not host this model permanently. First of all, the thesis is not that interesting. Secondly, one-time embedding, running it for an hour and sending it to a few friends and prompting for the article resulted in 130 requests to the API and 0.80$ in costs in using GPT. Unleashing this to the internet could quickly become very costly. As leaked by google, the open source community will provide cheap and powerful models at very low cost making the GPT-API obsolete in the future. New (small!) language model Chinchilla (70B) outperforms much larger Gopher (280B), GPT-3 (175B) models but until I manage to implement these, there is no free lunch to accessing information this way.

With the current speed of development, we'll have to be patient for a week or two :D

Conclusion

By leveraging the power of AI chatbot technology and implementing a prompt interface, I successfully made my PhD thesis more accessible and engaging. Through the creation of a custom knowledge base and the utilization of the ChatGPT API, I transformed my research findings into an interactive and user-friendly format. This approach can synthesize new insights, extract information and explain concepts across seniority levels. Embracing AI-driven solutions can truly revolutionize the accessibility and impact of academic research, making knowledge more accessible and empowering a broader audience.

Bonus

If you want to see the lab I worked in here's a funny little video of the winning entry to the 2014 Art & Science Contest of the Vienna Biocenter. Video and concept by Friederike, music and face by me.

Sign up to the newsletter to get new posts straight to your inbox!

If you want to learn more on embeddings and semantic search check out also this post